By Rebecca E. Jones

Three assistant professors in the Department of Computer Science (CS) in Virginia Commonwealth University’s School of Engineering are developing algorithms that can help the online news and social networking service Twitter detect attitudes and feelings, and even identify hate speech. Bartosz Krawczyk, Ph.D., Bridget McInnes, Ph.D., and Alberto Cano, Ph.D., have designed a robust system to computationally determine whether a tweet is positive or negative. This process, called sentiment analysis, is valuable to marketers and political consultants. It is also used to monitor social phenomena and spot potentially dangerous situations.

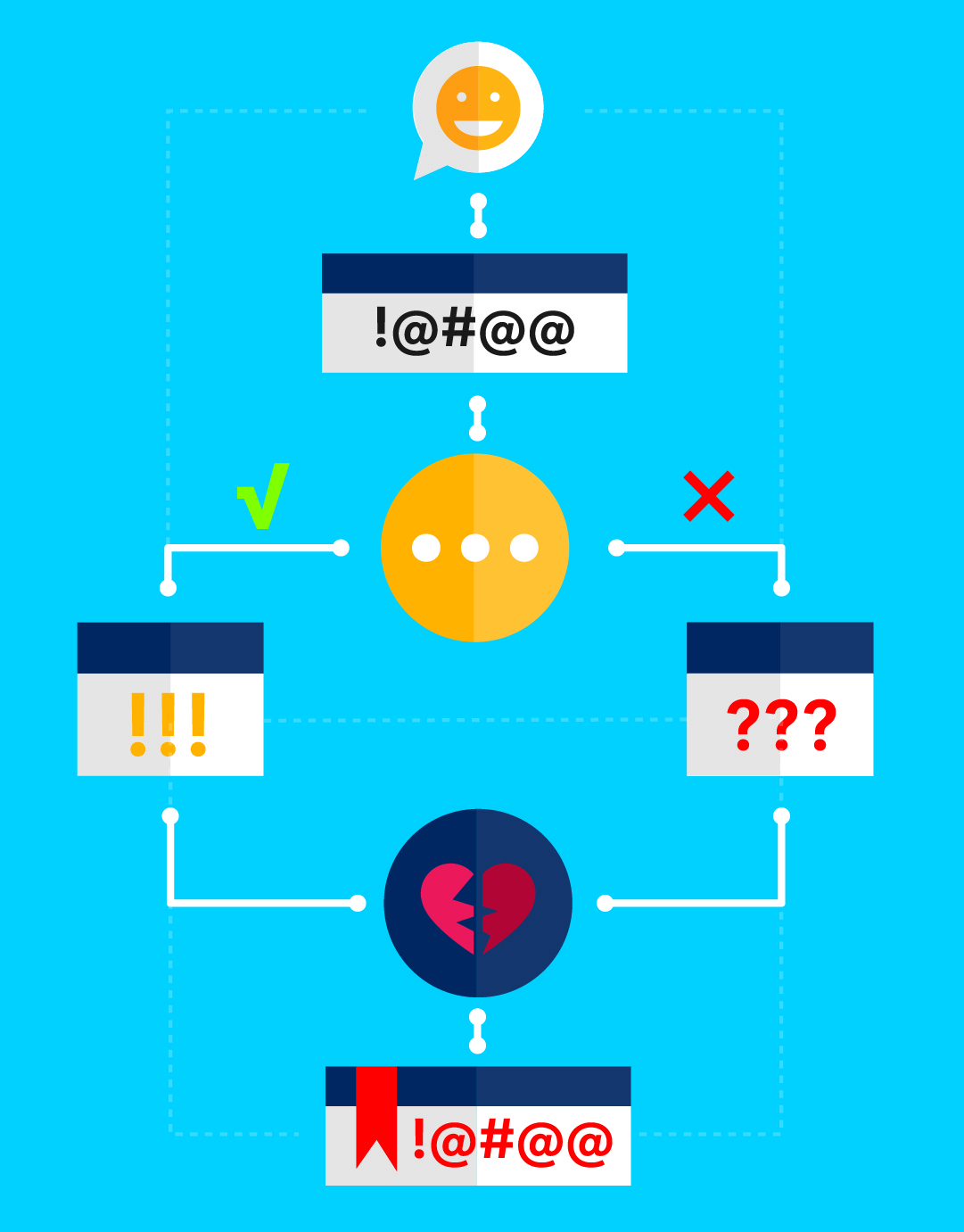

Sentiment analysis is more complicated than it may seem, especially in social media where high traffic, misspellings and emojis, not to mention sarcasm, abound. Is a tweet that says, “Gee, that’s great” sincere or shady? The VCU team’s system can tell because it leverages the distinct specialties of each researcher. Krawczyk brings expertise in algorithms that learn from and make predictions on data. McInnes is a specialist in interactions between computers and human languages. Cano specializes in big data, essential for the 6,000-tweets-per-second Twitter space.
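To see why the problem is hard, consider a deliberately naive approach: count positive and negative words and compare the totals. The sketch below is only an illustration and is not the VCU team's system. The word list `LEXICON` and the function `sentiment` are hypothetical names made up for this example.

```python
import re

# Hypothetical toy lexicon (an assumption for illustration only).
# Real systems learn word weights from large sets of labeled tweets.
LEXICON = {"great": 1, "love": 1, "good": 1, "awful": -1, "hate": -1, "bad": -1}


def sentiment(text):
    """Label text 'positive', 'negative' or 'neutral' by summing word scores."""
    # Lowercase the text and keep runs of letters; punctuation, digits
    # and emojis are dropped by this simple pattern.
    words = re.findall(r"[a-z]+", text.lower())
    score = sum(LEXICON.get(word, 0) for word in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# The sarcastic reading of this tweet is invisible to a word counter.
print(sentiment("Gee, that's great"))
# Plainly negative wording is handled correctly.
print(sentiment("I hate waiting, this is awful"))
# A misspelling ("gr8") is not in the lexicon, so the signal is lost.
print(sentiment("This is gr8"))
```

A word counter labels “Gee, that’s great” as positive whether or not the writer meant it, and it misses “gr8” entirely. Those are the gaps a system that models language and learns from data has to close.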

The result is a system that is far more agile and accurate than the sentiment analysis programs currently on the market. The VCU team’s algorithms also have the ability to become more effective over time. “The novelty of our algorithms is that they can adapt and generate new knowledge,” Krawczyk said. This is because they are “interpretable,” meaning they can make a decision and explain why the decision was made, an asset in an environment where hackers and trolls are prevalent.

“In the future, this type of system could be used to catch incidents like what happened to the Microsoft chatbot,” McInnes said, referring to the 2016 “Tay” bot the company had to recall just 16 hours after its release when trolls hijacked it and taught it to tweet offensive messages.

Undergraduate computer science majors are also participating in the team’s research. Abigail Byram is working with Cano and McInnes on multilabel classification, a problem where multiple labels may be assigned to one item in a dataset. Andriy Mulyar is helping Krawczyk and McInnes deal with datasets that are sharply skewed towards one class, a situation that can confuse machine learning programs and cause mistakes. Both are participating under the Dean’s Undergraduate Research Initiative (DURI) program, which provides undergraduates the opportunity to conduct research in VCU labs.
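Both student projects can be pictured with a few lines of code. The sketch below is a generic illustration, not the students' or the team's method, and the function names `class_weights` and `to_indicator` and the sample labels are assumptions made up for this example. It shows one common way to counter skewed data, which is to weight each class inversely to how often it appears, and one common way to represent multilabel targets, as a row of 0/1 flags per item.

```python
from collections import Counter


def class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count).

    Rare classes get large weights, so errors on them cost more during training.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


def to_indicator(label_sets, all_labels):
    """Encode multilabel targets as 0/1 rows, one column per possible label."""
    return [[1 if label in labels else 0 for label in all_labels]
            for labels in label_sets]


# A skewed dataset: nine ordinary tweets for every hateful one (made-up data).
skewed = ["neutral"] * 9 + ["hateful"]
weights = class_weights(skewed)
print(weights)

# Multilabel: one tweet can be both negative and sarcastic (made-up data).
tags = ["positive", "negative", "sarcastic"]
rows = to_indicator([{"negative", "sarcastic"}, {"positive"}], tags)
print(rows)
```

Without such weighting, a program could label every tweet “neutral” and still be right nine times out of 10 on this data, which is the kind of mistake skewed datasets invite.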