Cang Ye, Ph.D., professor in VCU’s Department of Computer Science, is an internationally recognized inventor of assistive robotics for people with visual impairments, but that wasn’t his original plan.

“My research area is autonomous navigation, so I thought I would go into self-driving cars,” he said. “But self-driving cars take a lot of infrastructure, and a lot of people are already working on them. I was looking for a different niche.”

That niche appeared — literally — while he was looking out a window and saw people using white canes to cross the street.

“I thought, ‘That’s a good idea. I could put my applications on a cane,’” Ye said.

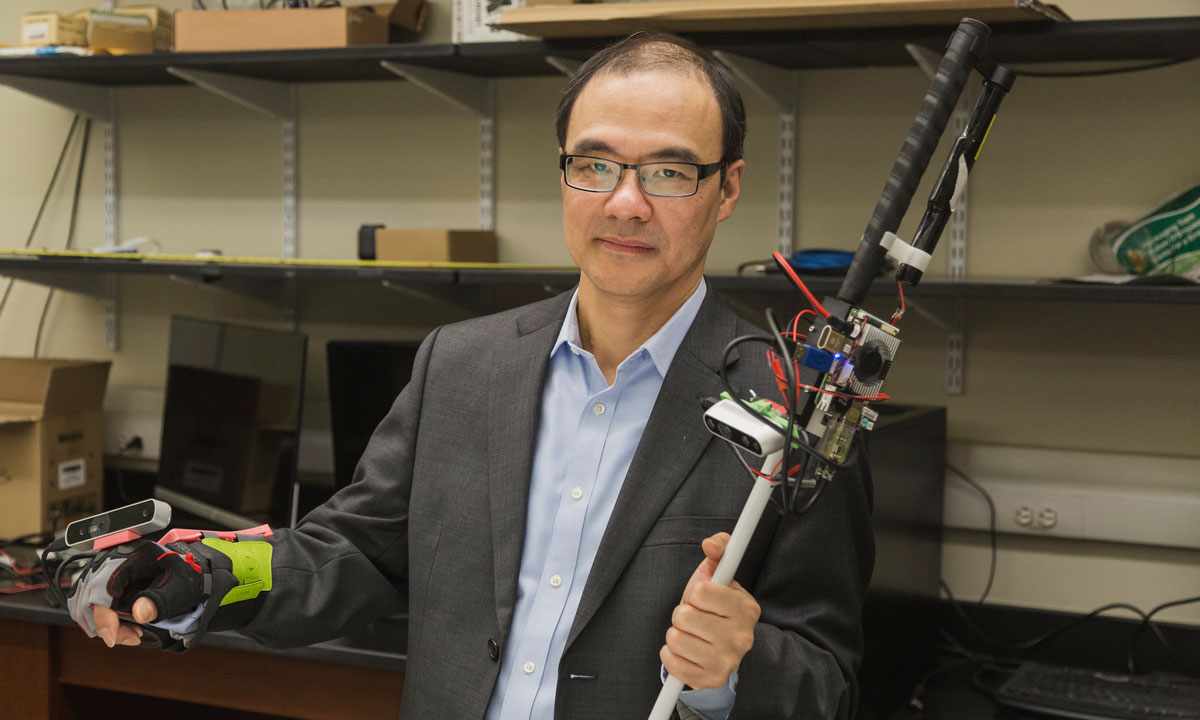

The result is a device he calls RoboCane, which augments the traditional white cane used by people with visual impairments.

The white cane has a roller tip that the user can sweep across the pathway to detect changes in level and texture. RoboCane lets users turn the tip into a “steering wheel” that guides them where they need to go.

RoboCane’s technology uses a 3D camera to measure distances and an interface that detects the user’s position in space. When the user gives a speech command, RoboCane uses a preloaded building floor plan and computer vision technology to map out a path.

“It knows where you are and where your destination is and is constantly recalculating both as you move,” Ye said. “It updates itself until you get where you are trying to go, and avoids obstacles.”
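For readers curious what "constantly recalculating" can look like in practice, here is a minimal sketch. It is not RoboCane's actual software, which the article does not describe. It assumes the floor plan is a small grid of free cells (`.`) and walls (`#`), uses a plain breadth-first search as a stand-in planner, and all names (`shortest_path`, `replan`, `floor_plan`) are hypothetical.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; '#' cells are blocked.

    Returns a list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def replan(grid, position, goal, new_obstacles=()):
    """Mark newly observed obstacles, then plan again from the current cell."""
    updated = [list(row) for row in grid]
    for r, c in new_obstacles:
        updated[r][c] = "#"
    return shortest_path(updated, position, goal)


# Hypothetical preloaded floor plan: '.' is walkable, '#' is a wall.
floor_plan = [
    "....",
    ".##.",
    "....",
]

first_route = replan(floor_plan, (0, 0), (2, 3))
# The camera reports something blocking cell (1, 3), so plan again.
detour = replan(floor_plan, (0, 0), (2, 3), new_obstacles=[(1, 3)])
```

Calling `replan` each time the user's position or the set of observed obstacles changes gives the "updates itself until you get where you are trying to go" behavior in miniature. A real system would likely use a more efficient planner and a richer map.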

RoboCane is meant to complement, not replace, the white cane, so it can switch modes with a voice command, the press of a button — or even intuitively. If it’s in “RoboCane” mode and the user makes a sweeping motion to check the path surface, the motor will temporarily disengage. “Once it detects the human intent, it changes for you,” Ye explained.

With major funding from the National Institutes of Health’s National Eye Institute, Ye has completed the RoboCane prototype. He has also worked with the U.S. Department of Transportation to investigate indoor-outdoor applications, such as traveling from home all the way onto a bus or subway.

Ye’s newest project is a device that will help people with visual impairments navigate smaller — but equally challenging — terrain such as tabletops, doorways and coffee cups.

“The cane can get you to the door, but it can’t find the doorknob,” Ye said. “Now the person is facing a problem. ‘How do I know where the doorknob is? How do I get through the door?’”

Ye’s proposed solution is a new wearable. This robotic glove is known formally as a Wearable Robotic Object Manipulation Aid (W-ROMA). In Ye’s Robotics Laboratory, it’s called “RoboGlove.”

Like RoboCane, it uses a tiny camera and object detection technology. Based on the images it receives, the glove calculates the misalignment between the user’s hand and the target object.
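One simple way to picture that misalignment calculation is sketched below. It is an illustration only, not the glove's published method. It assumes an ideal pinhole camera that points along the hand's reach direction, a detector that reports the target's center in pixels, and a known field of view. The function name and parameters are hypothetical.

```python
import math


def angular_misalignment(target_px, image_size, fov_deg):
    """Angles (degrees) between the camera axis and a detected target.

    target_px:  (u, v) pixel center of the detected object
    image_size: (width, height) of the image in pixels
    fov_deg:    (horizontal, vertical) field of view in degrees
    Assumes an ideal pinhole camera with no lens distortion.
    """
    (u, v), (width, height) = target_px, image_size
    hfov, vfov = fov_deg
    # Focal lengths in pixels, derived from the field of view.
    fx = (width / 2) / math.tan(math.radians(hfov) / 2)
    fy = (height / 2) / math.tan(math.radians(vfov) / 2)
    # Positive yaw: target is right of center.
    # Positive pitch: target is below center (image rows grow downward).
    yaw = math.degrees(math.atan((u - width / 2) / fx))
    pitch = math.degrees(math.atan((v - height / 2) / fy))
    return yaw, pitch


# Hypothetical detection: a doorknob seen at the right edge of a 640x480 frame.
yaw, pitch = angular_misalignment((640, 240), (640, 480), (60.0, 45.0))
```

In this sketch a nonzero `yaw` or `pitch` is the error the glove would try to drive toward zero, for example by nudging the thumb until the target sits at the image center.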

“We built an actuator that can push your thumb to move your hand in the right position,” Ye said. “In the natural shape of the hand, the thumb has a specific position. If something moves my thumb, the rest of my hand will follow it to restore the natural position. That’s the basic idea.”

Ye is also considering a less intrusive way to accomplish this. He is working with Yantao Shen, Ph.D., associate professor of electrical and biomedical engineering at the University of Nevada, to equip the glove with an electro-tactile capability that uses waves and currents to “touch” the user’s hand as it prepares itself to open a door or lift a coffee cup.

“This would replace mechanical ‘pings’ and the need for moving parts, because all you would need is electrical signals to emulate that touch sense,” Ye said. “If the glove sees a cup handle, it will gently shape your hand to reach and pick up the coffee.”